Voice assistants are an everyday tool for customers worldwide. Still, language barriers and physical limitations prevent some audiences from using your services. You can overcome these challenges by building multilingual text-to-speech and speech-to-text functionality into voice assistants with natural language processing (NLP).

Over 142 million people in the US and a significant chunk of European users rely on voice assistant apps daily. According to the Fortune Business Insights 2021 Report, the voice recognition market will grow from $11.21 billion in 2022 to $49.79 billion in 2029.

In this article, we’ll demonstrate how NLP technology in voice assistants can help you reach more audiences, improve user experience, and drive business growth. We’ll also show you how to train and integrate a sophisticated model into your platform.

NLP Technologies in Multilingual Voice Assistants

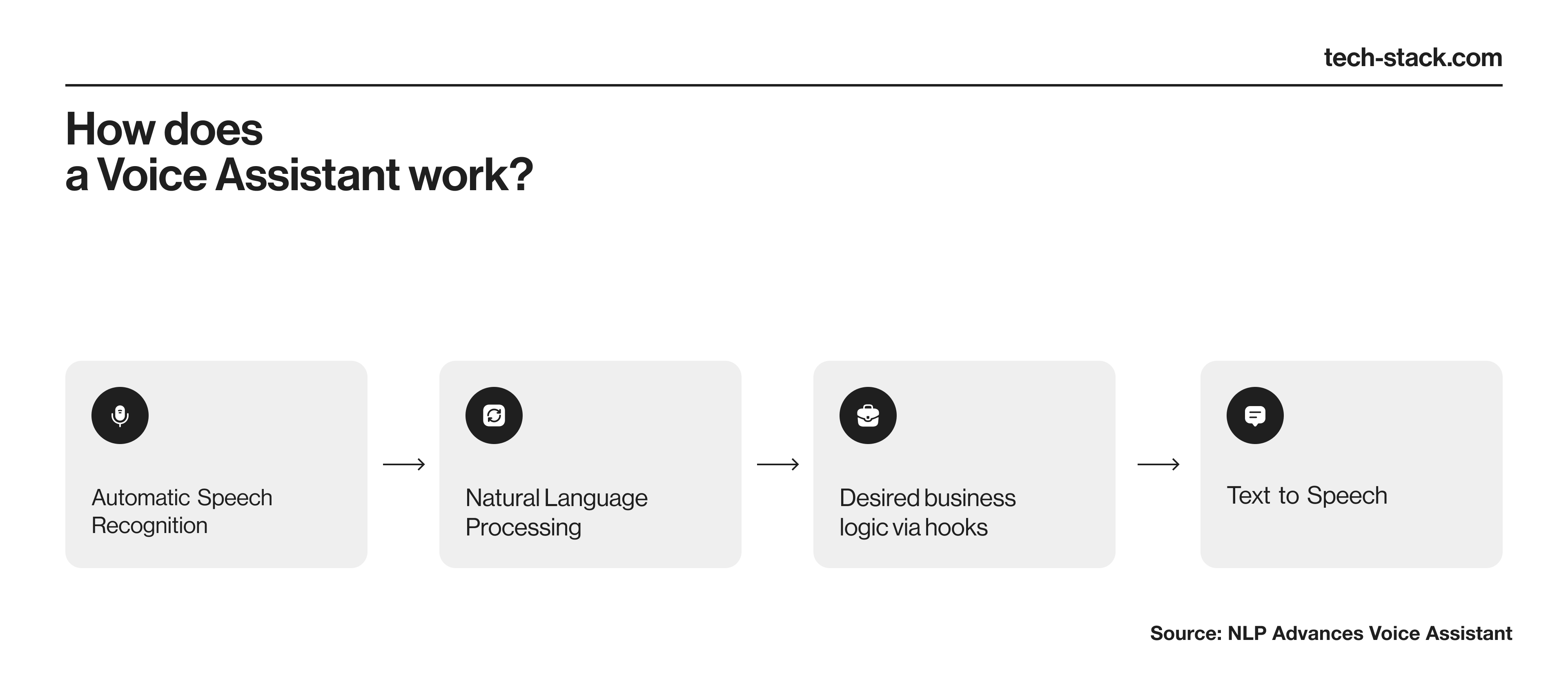

Voice assistants allow computers to interact with human spoken language. To make communications seamless and intuitive, voice assistants rely on a subfield of AI called natural language processing.

Natural language processing

NLP combines machine learning and computational linguistics to analyze, interpret, and understand human language from spoken and written words. This technology lets multilingual voice assistants process and respond to user input.

NLP includes two subsets: natural language understanding (NLU) and natural language generation (NLG). NLU helps computers extract meaning, understand context, identify entities, and establish relationships between words. Voice assistant apps use NLG to produce natural-sounding feedback to users.

Multilingual text-to-speech and speech-to-text

NLP, NLU, and NLG power multi-language text-to-speech (TTS) and speech-to-text (STT) apps that synthesize speech, enable voice control, and provide transcriptions. They also help process audio input to remove noise and enhance relevant speech signals to better recognize pronunciation, intonation, and emotion.

Let’s discuss how these technologies can empower your company using existing and well-implemented examples.

How NLP-Powered Voice Assistants Drive Business Value and User Experience

To help you understand the importance of NLP-powered technologies, we will showcase how others leverage them. This should give you an idea of how they can drive your business value, reduce expenses, and elevate user experience.

Improved speech recognition accuracy

Trained NLP models and audio speech recognition can be integrated into voice assistants, enabling them to provide more contextually relevant and accurate responses. This also improves named entity recognition, which allows your system to recognize important spoken entities (people, organizations, locations, or terms) in large data sets.

A team of Google researchers published a 2021 study on their AI model, SpeechStew. They trained the system on a dataset combining over 5,000 hours of labeled and unlabeled speech data. The benchmarking showed that the new model outperformed previous ones and solved more complex tasks.

Google launched Speech-to-Text API (speech recognition technology) in 2022. Using a neural sequence-to-sequence model, this API boosted their user interface and improved speech recognition accuracy in 23 languages.

Automated note-taking and data entry

Algorithm-based voice recognition reduces time spent on note-taking and manual data entry. For example, NLP software can automatically convert spoken language into text and enter it into an electronic health record (EHR). This allows healthcare professionals to avoid paperwork and take on more patients.

According to Fortune Business Insights, speech recognition software helped companies shift toward contactless services during the COVID-19 pandemic. To improve remote healthcare services, Cisco introduced WebEx—an AI-powered voice assistant that simplifies connecting IoT devices for conferences. It also takes meeting notes and provides real-time closed captions (for patients with hearing impairments).

Multi-language transcriptions

NLP-based technologies make web conferencing much easier with captions and post-call transcripts. They can create meeting minutes from voice data and tabulate key points for later use. Multilingual apps are also critical for globally distributed workforces, as they can translate speech during online meetings.

Speechmatics’s 2021 Voice Report (cited in the Fortune Business Insights paper) states that web conference transcription accounts for 44% of the voice technology market. Amazon Transcribe is one of the most popular solutions for call transcripts and real-time transcriptions. Another popular option is iFLYTEK’s set of multilingual AI subtitling tools, which support over 70 languages.

Secure voice-based authentication

Multi-factor authentication with voice biometrics enables seamless and secure verification. Instead of relying on passwords that anyone can steal and use, your system can identify a user based on unique vocal characteristics. This also saves support agents from lengthy authentication processes when users contact them.

Nuance incorporated biometric security for its support channel. It analyzes the person's physical and speech delivery factors with a 99% success rate in half a second. By automating the authentication process, the company reduced the handling time for an average call by 82 seconds. The company also uses speech-based authentication to detect fraud with 90% accuracy, which translates into over $2 billion of losses prevented every year.

Multilingual text-to-speech

NLP and TTS technologies can help voice assistants mimic human speech in a way that reflects a brand’s personality. Microsoft Azure Text-to-Speech is a customizable voice generator that creates a unique synthetic voice using your audio data as a sample.

These technologies also improve accessibility for people with physical impairments and reading disabilities. They convert written text in your apps or website into audible speech, so these users can navigate and use your services. Popular examples include Dragon NaturallySpeaking speech recognition software and Google’s TalkBack screen reader.

Customers often use multilingual text-to-speech apps to listen to textual content on the go in their preferred language. NaturalReader is an excellent example of a cloud-based speech synthesis app that can read books, documents, and webpages in over 50 voices and nine languages.

Customer sentiment analysis

NLP models can improve sentiment analysis by accurately determining the emotion behind words. Your system can identify overall tone, intent, and connections in texts and speech data to respond to customers appropriately. The most frequent use case would be conversational AI in Google Assistant, Amazon’s Alexa, and Apple’s Siri.

Your system can monitor general customer sentiment by analyzing social media and review platforms, including publicly posted videos. This lets you identify what customers like or dislike about your services, predict supply chain disruptions, and identify bugs. As a result, you can proactively address major concerns online before they escalate.

Repustate can analyze customer and employee feedback from audio and video content in over 23 languages and dialects. As another example, JetBlue, a major US-based low-cost airline, integrates real-time voice analytics to analyze contact center conversations and determine the most-suggested responses for customer inquiries.

Speech-based online shopping

Voice assistants allow for more engaging, personalized, and frictionless online shopping. It’s easier to talk to a smartphone or smart speaker to search for products, ask for recommendations, check relevant deals, and purchase. Multilingualism further enhances the experience by localizing to the customer’s language and context (like pricing and currency).

According to Statista, about 60% of US online shoppers in 2021 purchased daily or weekly via smart home assistants, and nearly half used mobile assistants for online shopping. This supports the prediction that the global value of e-commerce transactions made through voice assistants will rise from $4.6 billion in 2021 to $19.4 billion in 2023 (more than a fourfold increase).

Amazon’s Alexa is the prime example of voice-based shopping done right, letting users find, buy, check out, and compare products using voice commands. Another company, Slang Labs, integrated NLP, automatic speech recognition (ASR), and TTS in a multilingual voice search app to let customers search for products using a variety of languages.

Interactive voice response customer service

Interactive voice response (IVR) systems and conversational AI can save significant time and money. They recognize customer intent from spoken and written words, providing guidance 24/7 in multiple languages. If the query requires a live agent, the IVR will quickly escalate it.

Microsoft and Nuance united to power an interactive voice response solution that can swiftly resolve most incoming calls. It provides a great degree of personalization, changing styles and predicting customers’ needs based on the conversation’s tone and context. This IVR handles 50% of about 4 million monthly calls without involving an agent, translating to over $14 million in annual savings.

In 2022, Verint launched a virtual assistant platform powered by Da Vinci AI and Analytics. It uses natural language understanding, speech recognition, and sentiment analysis to craft customer self-service experiences. The company states that it helped its clients reduce call transfers by 20% immediately after deployment, leading to a 50% yearly growth in self-service.

Have we convinced you to try NLP for voice assistant apps? Let’s break down how you can integrate these technologies into your system.

Practical Case: Creating a Simple Voice Assistant with Code Implementation

Are you curious about the possibilities of building a voice assistant? In this practical case, we will guide you through the process of creating a simple voice assistant using Python code. You will learn how to record and recognize voice commands, as well as process and respond to them using a custom algorithm.

Guide for Integrating NLP in Multilingual Voice Assistants

Based on our expertise, this is how businesses should implement NLP-powered technologies and language models into voice assistants.

Step 1. Select the optimal NLP technology stack

Begin by analyzing your existing IT architecture, business model, and target audience. Choosing an NLP technology stack that aligns with your business goals, requirements, and technical specifications is essential. Here are the aspects you should consider:

- Application. A personalized shopping assistant, transcription service, or an IVR may require vastly different AI tools, IoT devices, and NLP models. Choose a technology stack that specializes in or supports your desired application.

- KPIs. Identify key performance indicators, such as information accuracy, response time, and language support.

- Accessibility. Prioritize user-friendly solutions that provide a seamless experience for more customers. Consider how your business can support people with impairments or disabilities.

- Scalability. A technology stack should have the potential to grow with your customer base and future business requirements.

Considering these factors, you can decide on tools and platforms that deliver better results in less time.

Step 2. Collect and prepare sample data

You need a diverse dataset of samples to train your NLP model. This includes collecting speech samples covering various topics and contexts relevant to your industry and business type. When mining for data, pay attention to these factors:

- Credibility. The source should be trustworthy enough, so you can minimize the risk of inaccuracies and inconsistencies in the training data.

- Accuracy. Verify that the collected data doesn’t have transcription or translation mistakes (at least for the key terminology). Notably, accent and dialect are the main challenges for ASR.

- Relevance. Collected speech samples should cover the type of requests and language your voice assistant will deal with the most.

- Biases. Datasets can overrepresent or underrepresent specific topics or dialects. You should also ensure the model doesn’t train on data that includes racial or gender stereotypes.
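One way to spot the over- and underrepresentation mentioned above is to count how the samples are distributed across languages or dialects. The sketch below is a minimal illustration, not a complete bias audit: the record layout (a `language` key per sample), the sample sentences, and the `min_share` threshold are all assumptions made for the example.

```python
from collections import Counter


def language_shares(samples):
    """Return each language's share of the dataset as a fraction of all samples."""
    counts = Counter(sample["language"] for sample in samples)
    total = sum(counts.values())
    return {language: count / total for language, count in counts.items()}


def underrepresented(samples, min_share=0.2):
    """List languages whose share falls below min_share (an assumed threshold)."""
    shares = language_shares(samples)
    return sorted(lang for lang, share in shares.items() if share < min_share)


# Hypothetical metadata records; a real project would load them from its own manifest.
samples = [
    {"language": "en-US", "text": "check my order status"},
    {"language": "en-US", "text": "cancel my subscription"},
    {"language": "en-US", "text": "talk to an agent"},
    {"language": "en-US", "text": "what are your opening hours"},
    {"language": "es-MX", "text": "quiero hablar con un agente"},
    {"language": "es-MX", "text": "cancelar mi pedido"},
    {"language": "de-DE", "text": "wo ist meine bestellung"},
]

print(language_shares(samples))
print(underrepresented(samples))
```

The same counting approach could be applied to topics, speaker demographics, or recording conditions, provided your metadata records them.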

Training might require the processing of terabytes of documents, audio, and video content. Of course, you may use pre-existing models already trained on data sets (like Google's Cloud Natural Language API). But this might lead to inconsistent results, plus the limitations described above.

For the best performance of multilingual text-to-speech and speech-to-text apps, you should collect and curate the training data yourself. Our company can help you mine clean, relevant, and accurate samples to build a robust NLP model.

Step 3. Train and test the NLP model

The collected data must be prepared and used to refine your NLP model for a multilingual assistant. Let’s divide this process into smaller steps:

- Filter the samples. Typical models can’t differentiate between useful and irrelevant information. Techstack and some similar companies offer AI services and machine learning to filter the fluff from your datasets.

- Pre-process the data. Relevant data should be free of grammatical and punctuation errors, etc. Next, you must transform the datasets into a format compatible with your NLP model.

- Extract features. Identify and extract features that will help the NLP model recognize speech patterns. This includes individual words, character frequencies (letters, punctuation marks, other language-specific traits), N-grams (contiguous word sequences), and embeddings (semantic meaning).

- Train the NLP model. Too much training may lead to overfitting—making the model “memorize” the data instead of learning the language patterns. To prevent this, you can use techniques such as cross-validation, hyperparameter tuning, and regularization during the training process.

- Evaluate performance. Test the model to verify it can accurately identify speech. Homophones—identical-sounding words that mean different things—require special attention.
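To make the feature extraction step from the list above more concrete, here is a minimal sketch that uses only the Python standard library. It covers word tokens, word N-grams, and character frequencies. Embeddings are left out because they require a trained model. The function names and the sample utterance are illustrative assumptions, and a production pipeline would likely use a dedicated NLP library instead.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens (Unicode-aware)."""
    return re.findall(r"\w+", text.lower())


def word_ngrams(tokens, n):
    """Return contiguous n-word sequences as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def char_frequencies(text):
    """Count every non-space character, including punctuation and accented letters."""
    return Counter(ch for ch in text.lower() if not ch.isspace())


utterance = "Turn on the kitchen lights, please"  # hypothetical voice command
tokens = tokenize(utterance)

print(tokens)
print(word_ngrams(tokens, 2))
print(char_frequencies(utterance).most_common(3))
```

Because `\w` matches Unicode letters in Python 3, the same tokenizer handles accented characters in languages such as Spanish or German, although languages written without spaces between words would need a different segmentation approach.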

You should further optimize the NLP model for your voice assistant. This involves adjusting the input and output formats and linking the model to the functions your voice assistant is designed to perform.
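Linking the model to assistant functions is often done with a lookup table that maps each predicted intent to a handler. The sketch below assumes the model returns a dictionary with an `intent` label and a `slots` dictionary. That output format, the intent names, and the handlers are hypothetical and would need to match whatever your model actually produces.

```python
from datetime import datetime


def tell_time(slots):
    """Handler for a hypothetical 'get_time' intent."""
    return "It is " + datetime.now().strftime("%H:%M") + "."


def set_timer(slots):
    """Handler for a hypothetical 'set_timer' intent; expects a 'minutes' slot."""
    minutes = slots.get("minutes")
    if minutes is None:
        return "For how many minutes?"
    return f"Timer set for {minutes} minutes."


# Maps intent labels (assumed model output) to assistant functions.
HANDLERS = {"get_time": tell_time, "set_timer": set_timer}


def dispatch(prediction):
    """Route a model prediction to its handler, with a fallback for unknown intents."""
    handler = HANDLERS.get(prediction.get("intent"))
    if handler is None:
        return "Sorry, I can't help with that yet."
    return handler(prediction.get("slots", {}))


print(dispatch({"intent": "set_timer", "slots": {"minutes": 10}}))
print(dispatch({"intent": "order_pizza", "slots": {}}))
```

Keeping the mapping in one table means a new capability only needs a new handler and one new entry, without touching the model-facing code.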

Step 4. Implement NLP into the voice assistant

Integrate a validated NLP model into your voice assistant. This stage involves adding code that enables the model to analyze voice commands and generate responses. The process should look like this:

- A user speaks to the voice assistant app in the preferred language.

- The app converts speech to text using ASR.

- Text is passed to the NLP model for analysis.

- The NLP model processes the text and generates a response.

- The voice assistant presents a response using multilingual text-to-speech technology or displays the information on the screen (or both).
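The flow above can be sketched as three pluggable stages chained together. Everything in this example is a stand-in: `fake_stt`, `fake_nlp`, and `fake_tts` are placeholder functions invented for illustration, and in a real assistant each would wrap your chosen ASR engine, trained NLP model, and TTS service.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class VoiceAssistant:
    """Chains speech recognition, language processing, and speech synthesis."""
    speech_to_text: Callable[[bytes, str], str]   # (audio, language) -> transcript
    generate_reply: Callable[[str, str], str]     # (transcript, language) -> reply text
    text_to_speech: Callable[[str, str], bytes]   # (reply text, language) -> audio

    def handle(self, audio: bytes, language: str) -> Tuple[str, bytes]:
        """Run one request through all three stages; return reply text and audio."""
        transcript = self.speech_to_text(audio, language)
        reply = self.generate_reply(transcript, language)
        return reply, self.text_to_speech(reply, language)


# Placeholder stages so the sketch runs without any speech libraries.
def fake_stt(audio, language):
    return audio.decode("utf-8")  # pretend the audio bytes are already text


REPLIES = {"en": "You said: {}", "es": "Dijiste: {}"}


def fake_nlp(transcript, language):
    # Fall back to English when the language has no reply template.
    return REPLIES.get(language, REPLIES["en"]).format(transcript)


def fake_tts(text, language):
    return text.encode("utf-8")  # pretend the encoded text is synthesized audio


assistant = VoiceAssistant(fake_stt, fake_nlp, fake_tts)
text, audio = assistant.handle("hola".encode("utf-8"), "es")
print(text)
```

Returning both the reply text and the audio lets the app speak the response, display it on screen, or do both. Passing the stages in as functions also makes it possible to test the flow with stubs before any real model is connected.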

We recommend validating the voice assistant before deploying it to the public. Techstack achieves this with the Minimum Viable Product (MVP) software development approach: we create a prototype with core NLP functionality, so you can test it on your audience with minimal development costs.

Step 5. Iterate and improve the model

Successful NLP-powered voice assistants rely on continuous improvement. Your team should maintain optimal performance, fix bugs, and roll out security updates. Monitor user feedback to identify areas for improvement.

Regularly test new algorithms, hyperparameters, and training data. This iterative process enables your model to adapt, learn, and improve its accuracy and performance for certain tasks.
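To compare iterations objectively, it helps to track a single accuracy metric on a fixed test set. This article doesn't prescribe a metric. Word error rate (WER), a common choice for speech recognition, is used here as an assumption. The sketch computes it as the word-level edit distance (substitutions, deletions, and insertions) divided by the number of words in the reference transcript. The sample transcripts are invented.

```python
def word_error_rate(reference, hypothesis):
    """Return the word-level edit distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # previous[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


# Hypothetical reference transcript and two recognizer outputs.
reference = "turn on the kitchen lights"
print(word_error_rate(reference, "turn on the chicken lights"))
print(word_error_rate(reference, "turn on lights"))
```

Running the same test set after every change to the training data or hyperparameters shows whether a change actually lowered the error rate for each supported language.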

Future Directions

Enhanced customer experience is the driving factor for the popularity of NLP. Multilingual text-to-speech apps and voice assistants powered by these technologies give customers more ways to engage with the brand and reach a broader demographic.

Successful implementation depends on expertise. Your team should prepare the correct datasets, train the NLP models correctly, and integrate the solution into an intuitive app.

Our team is well-equipped to develop voice assistants, whether you want to build your software from scratch or enhance it with a sophisticated NLP model. Ready to supercharge your customer interaction? Contact us, and let’s start your transformative journey together.